Configure for Secure Impersonation

In a Hadoop environment, secure impersonation enables the Designer Cloud Powered by Trifacta platform and its users to act as the signed-in user when performing actions on Hadoop. When it is enabled, you can use the permissions infrastructure in your Hadoop cluster to control privacy, collaboration, and data sharing for your user base. Each Alteryx user's jobs and job outputs are owned by that user instead of by the Hadoop user [hadoop.user]. The Alteryx system account is still required in secure impersonation mode.

This configuration is optional.

For more information on Hadoop secure impersonation, see http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-common/Superusers.html.

Complete the following steps to enable secure impersonation.

Note

Alteryx secure impersonation requires Kerberos to be applied to the Hadoop cluster. However, you can use Kerberos without enabling secure impersonation, if desired. See Configure for Kerberos Integration.

Users and groups for secure impersonation

On the Hadoop cluster, the Designer Cloud Powered by Trifacta platform requires a common Unix or LDAP group containing the [hadoop.user (default=trifacta)] and all Alteryx users.

Note

In UNIX environments, usernames and group names are case-sensitive. Please be sure to use the case-sensitive names for users and groups in your Hadoop configuration and Alteryx configuration file.

Note

If the HDFS user has restrictions on its use, it is not suitable for use with secure impersonation. Instead, enable HttpFS and use a separate HttpFS-specific user account. For more information, see Configure for Hadoop.

Assuming this group is named trifactausers:

1. Create a Unix or LDAP group named trifactausers.
2. Make the [hadoop.user] user a member of trifactausers.
3. Verify that all user principals that use the platform are also members of the trifactausers group.
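To check group membership from a Linux host, you could use a short script like the following. It is a minimal sketch, not a platform tool: it relies on Python's standard `grp` and `pwd` modules, so it only sees users and groups that the host resolves through its name service (local files, or LDAP if the host is configured for it). The function name `missing_group_members` is hypothetical.

```python
import grp
import pwd


def missing_group_members(group_name, usernames):
    """Return the usernames that are not members of group_name on this host.

    A user counts as a member if the group is their primary group or if they
    are listed as a supplementary member. Unknown users are reported as missing.
    """
    group = grp.getgrnam(group_name)  # raises KeyError if the group does not exist
    missing = []
    for name in usernames:
        try:
            primary_gid = pwd.getpwnam(name).pw_gid
        except KeyError:
            # The user is not known to this host at all.
            missing.append(name)
            continue
        # gr_mem lists supplementary members only, so also compare primary GIDs.
        if name not in group.gr_mem and primary_gid != group.gr_gid:
            missing.append(name)
    return missing
```

For example, `missing_group_members("trifactausers", ["trifacta", "alice"])` returns an empty list when both users belong to the group; `alice` is a placeholder for one of your users' principals.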

Hadoop configuration for secure impersonation

In your Kerberos-enabled Hadoop cluster, you must configure the user [hadoop.user] as a secure impersonation proxy user for Alteryx users.

Note

The following addition must be made to your Hadoop cluster configuration file. This file must then be copied to the Trifacta node along with the other required cluster configuration files. See Configure for Hadoop.
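The proxy-user property names embed the [hadoop.user] value (`hadoop.proxyuser.<user>.hosts` and `hadoop.proxyuser.<user>.groups`), so if you manage core-site.xml with scripts, it can help to generate them instead of editing them by hand. The sketch below is an illustration, not a Trifacta or Hadoop tool. It uses the standard library's `xml.etree.ElementTree`, and the `trifacta` and `trifactausers` values in the usage line are placeholders for your own values.

```python
import xml.etree.ElementTree as ET


def proxyuser_properties(hadoop_user, hadoop_group, hosts="*"):
    """Build the core-site.xml <property> elements that let hadoop_user
    impersonate members of hadoop_group from the given hosts."""
    props = []
    for suffix, value in (("hosts", hosts), ("groups", hadoop_group)):
        prop = ET.Element("property")
        ET.SubElement(prop, "name").text = f"hadoop.proxyuser.{hadoop_user}.{suffix}"
        ET.SubElement(prop, "value").text = value
        props.append(prop)
    return props


if __name__ == "__main__":
    # Placeholder values: substitute your [hadoop.user] and [hadoop.group].
    for prop in proxyuser_properties("trifacta", "trifactausers"):
        print(ET.tostring(prop, encoding="unicode"))
```

The `hosts` default of `*` matches the snippet in this section. You can narrow it to specific host names if your security policy requires that.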

In core-site.xml on the Hadoop cluster, add the following configuration, replacing the values for [hadoop.user] and [hadoop.group (default=trifactausers)] with the values appropriate for your environment:

<!-- Trifacta secure impersonation -->
<property>
  <name>hadoop.proxyuser.[hadoop.user].hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.[hadoop.user].groups</name>
  <value>[hadoop.group]</value>
</property>

HDFS Directories

Verify that the shared upload and job results directories are owned by and writable by the trifactausers group.

For more information on HDFS directories and their permissions, see Prepare Hadoop for Integration with the Platform.

Stricter directory permissions in an impersonated environment

Note

Stricter permission sets can adversely affect users' ability to access shared flows.

By default, in an environment with secure impersonation enabled, the directories and sub-directories of the locations for uploaded data and job results are set to 730. This configuration has the following effects for impersonated users:

- The 7 owner (user) permission means that individual users have full permissions over their own directories. Individual users can read data only in their own upload directory below /trifacta/uploads.
- The 3 group permission is used because the top-level directory is owned by the [hadoop.user] user. Each impersonated user in the trifactausers group requires write and execute permissions to create and manage their own directory. This permission set means that the trifactausers group has write and execute permissions, but not read permission, over a user's upload directory. Without access-level controls, these permissions are inherited from the parent directory and have the following implications:
  - Since impersonated group users have execute permissions, they can traverse into any directory in this area.
  - Since impersonated group users have write permissions, they can theoretically write to any other user's upload directory, although this directory is not configurable.
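Each octal digit in a mode such as 730 is the sum of read (4), write (2), and execute (1) for the owner, the group, and others, in that order. The following minimal Python sketch (the function name `describe_mode` is hypothetical) expands a mode into the familiar `rwx` notation. It shows that 730 gives the group `-wx`, with no read bit.

```python
def describe_mode(mode: str) -> dict:
    """Expand a three-digit octal mode such as "730" into rwx strings per class."""
    if len(mode) != 3:
        raise ValueError("expected exactly three octal digits, e.g. '730'")
    result = {}
    for cls, digit in zip(("owner", "group", "other"), mode):
        bits = int(digit, 8)  # raises ValueError for non-octal digits such as '8'
        result[cls] = "".join(
            flag if bits & mask else "-"
            for flag, mask in (("r", 4), ("w", 2), ("x", 1))
        )
    return result


if __name__ == "__main__":
    print(describe_mode("730"))  # {'owner': 'rwx', 'group': '-wx', 'other': '---'}
    print(describe_mode("700"))  # {'owner': 'rwx', 'group': '---', 'other': '---'}
```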

Within the upload area, each user of the Designer Cloud Powered by Trifacta platform is assigned an individual directory. For simplicity, the permissions on these directories are automatically applied to their sub-directories. In an impersonated environment, an individual directory is owned by the Hadoop principal for the user, so if two or more users share the same Hadoop principal, they can theoretically access each other's directories. This simple scheme can be replaced by a more secure method that uses access-level controls.

Note

To enable these stricter permissions, access-level controls must be enabled on your Hadoop cluster. For more information, please see the documentation for your Hadoop distribution.

If access-level controls are enabled for your impersonated environment, you can apply stricter permissions on these sub-directories for additional security.
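On Apache Hadoop, HDFS ACL support is governed by the `dfs.namenode.acls.enabled` property in hdfs-site.xml. Its default value has differed between Hadoop releases, and your distribution's management tools may set it for you, so treat the following only as a quick local check. It is a minimal sketch using the standard library's XML parser, and the function name `acls_enabled` is hypothetical. It returns `None` when the property is not set explicitly, meaning that the default for your release applies.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def acls_enabled(hdfs_site_xml: str) -> Optional[bool]:
    """Report the dfs.namenode.acls.enabled setting from hdfs-site.xml text.

    Returns True or False when the property is set, or None when it is absent
    (in which case the default for your Hadoop release applies).
    """
    root = ET.fromstring(hdfs_site_xml)
    for prop in root.iter("property"):
        if prop.findtext("name", "").strip() == "dfs.namenode.acls.enabled":
            return prop.findtext("value", "").strip().lower() == "true"
    return None


if __name__ == "__main__":
    sample = """<configuration>
  <property>
    <name>dfs.namenode.acls.enabled</name>
    <value>true</value>
  </property>
</configuration>"""
    print(acls_enabled(sample))  # True
```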

Steps:

The following steps apply 730 permissions to the top-level directory and 700 to all user sub-directories. With these stricter permissions on sub-directories, no one except the owning user can access a user's sub-directory. This restriction includes the trifacta user.

1. The /trifacta/uploads value is the default upload location in HDFS. In your deployment, this directory is defined in platform configuration. You can access this setting through the Admin Settings page (recommended) or trifacta-conf.json. For more information, see Platform Configuration Methods. Locate the value for hdfs.pathsConfig.fileUpload.

   Note

   The following steps can also be applied to the directory where job results are written. By default, this directory is /trifacta/queryResults. For more secure controls over job results, you should also retrieve the value for hdfs.pathsConfig.batchResults.

   Replace the values in the following steps with the values from your configuration.

2. Before you begin, consider resetting all access-level controls on the upload directories and sub-directories:

   hdfs dfs -setfacl -b /trifacta/uploads

3. The following command removes the default group permissions from the uploads directory and any sub-directory, so that an individual group member's permissions are not automatically shared with the group:

   hdfs dfs -setfacl -R -m default:group::--- /trifacta/uploads

4. The following command is required to enable all users to access dictionaries:

   hdfs dfs -setfacl -R -m default:group::rwx /trifacta/uploads/0

5. The following command sets the permissions at the top level to 730:

   hdfs dfs -chmod 730 /trifacta/uploads

   Sub-directory permissions are a combination of these permissions and any relevant access-level controls.

6. Apply to the queryResults directory: Repeat the above steps for the /trifacta/queryResults directory as needed.

7. ACL for Hive: If you need to apply access controls to Hive, you can use the following:

   hdfs dfs -setfacl -R -m default:user:hive:rwx /trifacta/queryResults
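Because the same commands are repeated for each configured directory, you may prefer to generate them from the values of hdfs.pathsConfig.fileUpload and hdfs.pathsConfig.batchResults. The following sketch is hypothetical (the helper names are not part of the platform). It reproduces the reset, default-group, dictionary, and chmod commands from the steps above, and it only prints them unless you pass `dry_run=False`, which requires the `hdfs` CLI on your PATH. `shlex.join` requires Python 3.8 or later.

```python
import shlex
import subprocess


def stricter_permission_commands(base_dir, dictionary_dir=None):
    """Return the hdfs commands from the steps above for one base directory.

    dictionary_dir is only needed for the uploads location (for example,
    "/trifacta/uploads/0"); omit it for the job results directory.
    """
    commands = [
        # Remove extended ACL entries from the base directory.
        ["hdfs", "dfs", "-setfacl", "-b", base_dir],
        # Stop default group permissions from being inherited by new children.
        ["hdfs", "dfs", "-setfacl", "-R", "-m", "default:group::---", base_dir],
    ]
    if dictionary_dir is not None:
        # Keep the shared dictionaries directory accessible to the group.
        commands.append(
            ["hdfs", "dfs", "-setfacl", "-R", "-m", "default:group::rwx", dictionary_dir]
        )
    # Apply 730 to the top-level directory.
    commands.append(["hdfs", "dfs", "-chmod", "730", base_dir])
    return commands


def run_commands(commands, dry_run=True):
    """Print each command; execute it as well when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_commands(stricter_permission_commands("/trifacta/uploads", "/trifacta/uploads/0"))
    run_commands(stricter_permission_commands("/trifacta/queryResults"))
```

The optional Hive ACL from the last step is left out of this sketch, because it applies only when Hive needs access to job results.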

User directories for YARN

For YARN deployments, each Hadoop user must have a home directory in HDFS to which the user has write permissions. This directory must be located at the following path:

/user/<username>

where <username> is the Hadoop principal to use.

Note

For jobs executed on the default Trifacta Photon running environment, user output directories must be created with the same permissions as you want for the transform and sampling jobs executed on the server. Users may be able to see the output directories of other users, but output job files are created with the user umask setting (hdfs.permissions.userUMask), as defined in platform configuration.

Example for Hadoop principal myUser:

hdfs dfs -mkdir /user/myUser
hdfs dfs -chown myUser /user/myUser

Optional:

hdfs dfs -chmod -R 700 /user/myUser
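If you have many Hadoop principals, you can generate the same commands for each one. The following minimal sketch uses a hypothetical helper, `home_dir_commands`, which builds the mkdir and chown commands shown above, plus the optional chmod when `lock_down=True`. It only prints the commands, so you can review them before running them on the cluster. The user names in the usage line are placeholders.

```python
import shlex


def home_dir_commands(usernames, lock_down=False):
    """Return hdfs commands that create /user/<username> owned by each Hadoop user."""
    commands = []
    for name in usernames:
        home = f"/user/{name}"
        commands.append(["hdfs", "dfs", "-mkdir", home])
        commands.append(["hdfs", "dfs", "-chown", name, home])
        if lock_down:
            # Optional step: restrict the home directory to its owner.
            commands.append(["hdfs", "dfs", "-chmod", "-R", "700", home])
    return commands


if __name__ == "__main__":
    for cmd in home_dir_commands(["myUser", "otherUser"], lock_down=True):
        print(shlex.join(cmd))
```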

Alteryx configuration for secure impersonation

You can apply this change through the Admin Settings Page (recommended) or trifacta-conf.json. For more information, see Platform Configuration Methods.

Set the following parameter to true.

"hadoopImpersonation" : true,

If you have enabled the Spark running environment for job execution, you must enable the following parameter as well:

"spark-job-service.sparkImpersonationOn" : true,

For more information, see Configure Spark Running Environment.
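If you edit trifacta-conf.json directly instead of using the Admin Settings page, you can apply both settings with a short script such as the following. This is a sketch under an assumption: it treats a dotted parameter name such as `spark-job-service.sparkImpersonationOn` as a nested JSON object (`"spark-job-service": {"sparkImpersonationOn": true}`). Confirm that this matches the structure of your file, and back up the file before writing to it. The helper names are hypothetical.

```python
import json


def set_dotted(config: dict, dotted_key: str, value) -> None:
    """Set value at a dotted path such as "a.b.c", creating nested objects as needed.

    Assumption: dotted parameter names map to nested JSON objects in the file.
    """
    *parents, leaf = dotted_key.split(".")
    node = config
    for key in parents:
        node = node.setdefault(key, {})
    node[leaf] = value


def enable_impersonation(conf_text: str, spark_enabled: bool) -> str:
    """Return updated JSON text with the impersonation parameters set to true."""
    config = json.loads(conf_text)
    set_dotted(config, "hadoopImpersonation", True)
    if spark_enabled:
        set_dotted(config, "spark-job-service.sparkImpersonationOn", True)
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # Hypothetical minimal input; a real trifacta-conf.json contains many more settings.
    print(enable_impersonation('{"spark-job-service": {"enabled": true}}', spark_enabled=True))
```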

Umask permissions

Under secure impersonation, the Designer Cloud Powered by Trifacta platform uses two separate umask permission sets. If secure impersonation is not enabled, the Designer Cloud Powered by Trifacta platform uses the systemUmask for all operations.

Note

Umask settings are three-digit octal codes that define the read, write, and execute permission bits for the owning user, the group, and others (in that order) for a file or directory. These settings are inverse settings: each bit that is set in the umask removes the corresponding permission. For example, the umask value of 077 allows read, write, and execute permissions for the owning user and removes all permissions for the group and others. For more information, see https://en.wikipedia.org/wiki/Umask.

| Name | Property | Description |
|---|---|---|
| userUmask | hdfs.permissions.userUMask | Controls the output permissions of files and directories that are created by impersonated users. These permissions define private permission settings for individual users. |
| systemUmask | hdfs.permissions.systemUmask | Controls the output permissions of files and directories that are created by the Alteryx system user. These permissions also control resources for the admin user and resources that are shared between Alteryx users. |

Notes:

- In a secure impersonation environment, systemUmask should be defined as 027 (the default value), which enables read access to shared resources for all users in the Alteryx group.
- For greater security, you can set userUmask to 077, which locks down individual user directories under /trifacta/queryResults. However, secure impersonation requires more permissions on systemUmask to enable sharing of resources.
- The permission settings for the admin user are controlled by systemUmask.
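To see what the 027 and 077 values mean in practice, you can apply the HDFS permissions rule: a new directory starts from 777 and a new file from 666, and every bit set in the umask is cleared. The following minimal sketch assumes that files are created without an explicit permission argument, and the function name `apply_umask` is hypothetical. It computes the resulting modes.

```python
def apply_umask(umask: str, is_directory: bool) -> str:
    """Return the octal mode of a new HDFS file or directory after applying umask.

    Uses the HDFS rule: new files start from 666 and new directories from 777,
    and every bit set in the umask is cleared.
    """
    base = 0o777 if is_directory else 0o666
    return format(base & ~int(umask, 8), "03o")


if __name__ == "__main__":
    for umask in ("027", "077"):
        print(umask, "dir:", apply_umask(umask, True), "file:", apply_umask(umask, False))
    # 027 dir: 750 file: 640
    # 077 dir: 700 file: 600
```

With 027, group members keep read (and, for directories, execute) access, which is what allows shared resources to be read. With 077, only the owner retains any access.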

Provisioning impersonated users

Note

A newly created user in the platform cannot log in unless provisioned by a platform administrator, even if self-registration is enabled. The administrator must apply the Hadoop principal to the account.

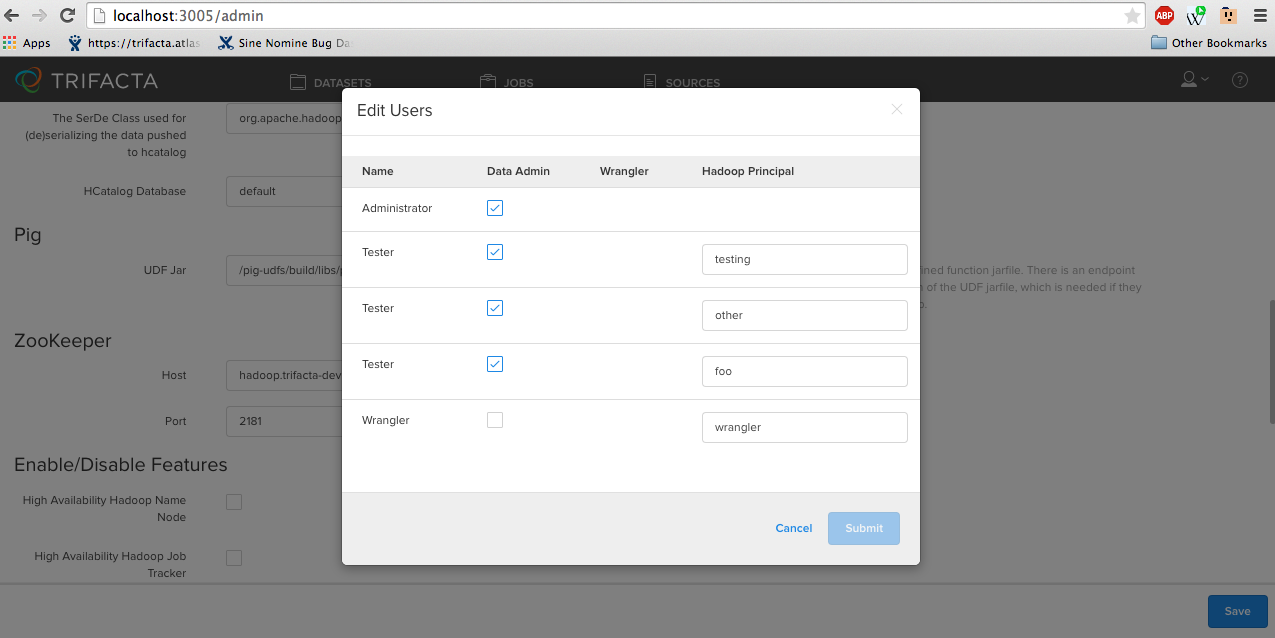

To provision users as admin, log in and visit the Admin Settings page from the drop-down menu on the top right. Locate the Users section and click Edit Users. If you have the Secure Hadoop Impersonation flag on with Kerberos enabled, you should see a Hadoop Principal column. From here, you can assign each user a Hadoop principal. Multiple users can share the same Hadoop principal, but each Alteryx user must have a Hadoop principal assigned to them.

|

Figure: Editing users