Spark Execution Properties Settings

When you specify a job in the Run Job page, you can pass a set of Spark property values to the Spark running environment. These values are applied to the execution of the job and override the global Spark settings for your deployment.

Note

A workspace administrator must enable Spark job overrides and configure the set of available parameters. For more information, see Enable Spark Job Overrides.

Spark overrides are applied to individual output objects.

You can specify overrides for ad-hoc jobs through the Run Job page.

You can specify overrides when you configure a scheduled job execution.

User-specific Spark overrides: If you have enabled user-specific overrides for Spark jobs, those settings take precedence over the settings that are applied through this feature. For more information, see Configure User-Specific Props for Cluster Jobs.
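The order of precedence described above can be pictured as a layered merge. The following Python snippet is only an illustrative sketch, not the product's implementation: the function name `effective_spark_properties` and all values are hypothetical, and the keys are standard Apache Spark property names used as examples.

```python
def effective_spark_properties(global_settings, job_overrides, user_overrides):
    """Merge three layers of settings; later layers win.

    Assumed order, lowest to highest precedence:
    global settings < per-job overrides < user-specific overrides.
    """
    merged = dict(global_settings)   # start from the deployment-wide defaults
    merged.update(job_overrides)     # overrides set for this job
    merged.update(user_overrides)    # user-specific overrides take precedence
    return merged


# Illustrative values only.
global_settings = {
    "spark.driver.memory": "4g",
    "spark.executor.memory": "6g",
    "spark.executor.cores": "2",
}
job_overrides = {"spark.executor.memory": "8g", "spark.executor.cores": "4"}
user_overrides = {"spark.executor.cores": "3"}

effective = effective_spark_properties(global_settings, job_overrides, user_overrides)
print(effective)
```

In this sketch, the driver memory falls through from the global settings, the executor memory comes from the job override, and the executor cores come from the user-specific override.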

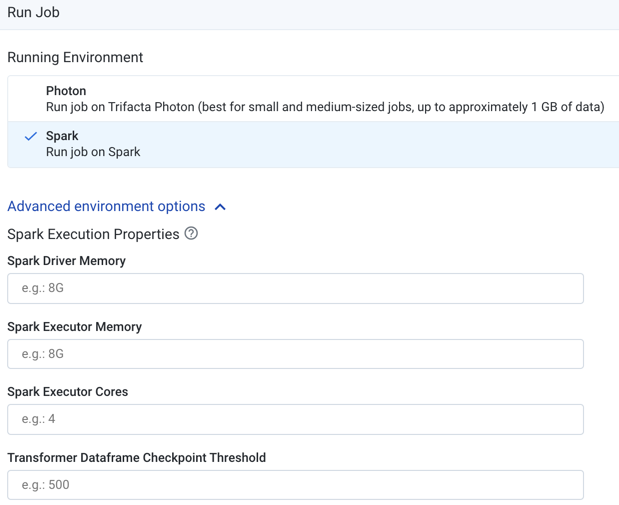

In the Run Job page, click the Advanced Execution Settings caret.

Figure: Spark Execution Properties

Default Spark overrides:

The first four properties are available for all Spark job overrides:

Warning

Before you modify these parameters, review the appropriate settings for each parameter with your cluster administrator. Some values can cause failures on the cluster. The values that you enter are not validated.

| Spark parameter | Description |
|---|---|
| Spark Driver Memory | Amount of physical RAM, in GB, on each Spark node that is made available for the Spark drivers. |
| Spark Executor Memory | Amount of physical RAM, in GB, on each Spark node that is made available for the Spark executors. |
| Spark Executor Cores | Number of cores on each Spark executor that are made available to Spark. |
| Transformer Dataframe Checkpoint Threshold | When checkpointing is enabled, the Spark DAG is checkpointed when approximately the number of expressions specified in this parameter has been added to the DAG. Checkpointing helps manage the volume of work that is processed through Spark at one time; by checkpointing after a set of steps, Designer Cloud Powered by Trifacta Enterprise Edition can reduce the chances of execution errors for your jobs. |

For more details on setting these parameters, see Tune Cluster Performance.
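Because entered values are not validated, it can help to check them against limits agreed with your cluster administrator before you type them into the Run Job page. The following Python snippet is a minimal sketch of such a check. The `LIMITS` values and the `check_overrides` helper are hypothetical, and the sketch assumes that each parameter is entered as a whole number (GB for the memory parameters).

```python
# Hypothetical upper bounds; agree on real limits with your cluster administrator.
LIMITS = {
    "Spark Driver Memory": 16,      # GB
    "Spark Executor Memory": 32,    # GB
    "Spark Executor Cores": 8,
    "Transformer Dataframe Checkpoint Threshold": 1000,
}


def check_overrides(overrides, limits=LIMITS):
    """Return a list of problems found in the override values (empty if none).

    `overrides` maps a parameter name to the string you intend to enter.
    """
    problems = []
    for name, raw in overrides.items():
        if name not in limits:
            problems.append(f"{name}: no agreed limit; ask your administrator")
            continue
        try:
            value = int(raw)
        except (TypeError, ValueError):
            problems.append(f"{name}: {raw!r} is not a whole number")
            continue
        if value < 1:
            problems.append(f"{name}: {value} must be at least 1")
        elif value > limits[name]:
            problems.append(f"{name}: {value} exceeds the agreed limit of {limits[name]}")
    return problems


# Example: one non-numeric value and one value above the agreed limit.
for problem in check_overrides({"Spark Driver Memory": "lots", "Spark Executor Memory": "64"}):
    print(problem)
```

A check like this only catches obvious mistakes. It does not replace a review of the settings with your cluster administrator.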

Other Spark overrides:

Your workspace administrator may have enabled other Spark properties to be overridden. These parameters appear at the bottom of the list.

Please check with your administrator for appropriate settings for these properties.