Diagnose Failed Jobs

Use these guidelines and features to begin the process of diagnosing jobs that have failed.

Job Types

The following types of jobs can be executed in the Designer Cloud Powered by Trifacta platform:

Conversion jobs: Some datasources, such as binary file or JSON formats, must be converted to a format that can be easily read by the Trifacta Application. During data ingestion, the datasource is converted to a natively supported file format and stored on backend storage.

Transform job: This type of job executes the steps in your recipe against the dataset to generate results in the specified format. When you configure your job, any set of selected output formats causes a transform job to execute according to the job settings.

Profile job: This type of job builds a visual profile of the generated results. When you configure your job, select Profile Results to generate a profile job.

Publish job: This job publishes results generated by the platform to a different location or datastore.

Ingest job: This job manages the import of data from a JDBC source into the default datastore for purposes of running a transform or sampling job.

Tip

Information on failed plan executions is contained in the orchestration-service.log file, which can be acquired in the support bundle. For more information, see Support Bundle Contents.

Note

For each collected sample, a sample job ID is generated. In the Samples panel, you can view the sample job IDs for your samples. These job IDs enable you to identify the sample jobs in the Sample Jobs page.

Identify Job Failures

When a job fails to execute, a failure message appears in the Job History page:

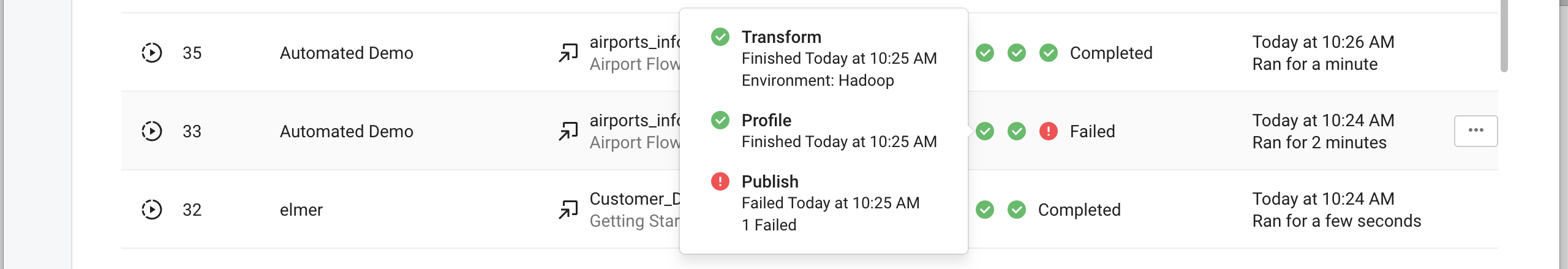


Figure: Publish job failed

In the above example, the Transform and Profile jobs completed, but the Publish job failed. In this case, the results exist and, if the source of the problem is diagnosed, they can be published separately.

From the job's context menu, select Download Logs to download the job's logs and look for reasons for the failure. See Review Logs below.

Invalid file paths

When your job uses files as inputs or outputs, you may receive invalid file path errors. Depending on the backend datastore, these can be one of the following:

Path to the file is invalid for the current user. Path may have been created by another user who had access to the location.

Path contains invalid characters in it. For more information, see Supported File Formats.

Resource was deleted.

Jobs that Hang

In some cases, a job may stay in a pending state indefinitely. Typically, these errors are related to a failure of the job tracking service. You can try the following:

Resubmit the job.

Have an administrator restart the platform. See Start and Stop the Platform.

Submit the job again.

Spark Job Error Messages

The following error messages may appear in the Trifacta Application when a Spark job fails to execute.

"Aggregate too many columns" error

Your job could not be completed due to one or more Pivot, Window or other Aggregation recipe steps having too many aggregate functions in the Values parameter.

Solution: Please split these aggregates across multiple Aggregation steps.

"Binary sort" error

Sorting a nested column such as an array or map is not supported.

Codegen error

Your job could not be completed due to the complexity of your recipe.

Tips:

Look to break up your recipe into sequences of recipes. You can chain recipes together one after another in Flow View.

If you have complex, multi-dataset operations, you should try to isolate these into smaller recipes.

Use sampling to checkpoint execution after complex steps.

"Colon in path" error

Your job references one or more invalid file paths. File and folder names cannot contain the colon character.

"Invalid input path" error

Your job references one or more invalid file paths. File names cannot begin with characters like dot or underscore.
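As a pre-flight check, the two path rules described for the "Colon in path" and "Invalid input path" errors can be tested before a job is submitted. The following is a minimal sketch, not part of the product: the function name `find_path_problems` is hypothetical, it assumes the input is only the path portion (no URI scheme or host, which can legitimately contain colons), and it checks only the dot and underscore prefixes named above.

```python
def find_path_problems(path: str) -> list[str]:
    """Return human-readable problems found in a file path.

    Assumption: `path` is the path portion only, for example
    "/data/in/sales.csv", with no scheme such as "hdfs://host:8020".
    """
    problems = []
    # Split into folder and file names, ignoring empty segments.
    parts = [part for part in path.split("/") if part]

    # Rule 1: file and folder names cannot contain the colon character.
    for part in parts:
        if ":" in part:
            problems.append(f"'{part}' contains a colon")

    # Rule 2: the file name (assumed to be the last segment) cannot
    # begin with a dot or an underscore.
    if parts and parts[-1][0] in "._":
        problems.append(f"file name '{parts[-1]}' begins with '{parts[-1][0]}'")

    return problems


for candidate in ["/data/in/sales.csv", "/data/2024:01/sales.csv", "/data/_tmp.csv"]:
    print(candidate, find_path_problems(candidate))
```

An empty list means that neither rule was violated; it does not guarantee that the path exists or that the current user can access it.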

"Invalid union" error

Union operations can only be performed on tables with compatible column types.

Tip

Edit the union in question. Verify that the columns are properly aligned and have consistent data types. For more information, see Union Page.
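The alignment check in the tip above can also be reasoned about outside the application. The sketch below is illustrative only: the function `union_mismatches`, the schema representation (ordered lists of column name and type name), and the type names are assumptions, and "compatible" is approximated as an exact match of type names, which may be stricter than what the platform requires.

```python
def union_mismatches(left, right):
    """Compare two schemas given as ordered lists of (column_name, type_name).

    Returns a list of messages describing misaligned positions.
    """
    issues = []

    # A differing column count means the columns cannot be fully aligned.
    if len(left) != len(right):
        issues.append(f"column count differs: {len(left)} vs {len(right)}")

    # Compare types position by position; names may differ in a union.
    for position, ((l_name, l_type), (r_name, r_type)) in enumerate(zip(left, right)):
        if l_type != r_type:
            issues.append(
                f"position {position}: {l_name} is {l_type}, {r_name} is {r_type}"
            )

    return issues


orders_2023 = [("id", "Integer"), ("amount", "Decimal")]
orders_2024 = [("id", "String"), ("amount", "Decimal")]
print(union_mismatches(orders_2023, orders_2024))
```

Any reported position is a candidate column to realign or retype on the Union page before re-running the job.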

"Job service unreachable" error

There was an error communicating with the Spark Job Service.

Tip

An administrator can review the contents of the spark-job-service.log file for details. See System Services and Logs.

"Oom" error

When you encounter out of memory errors related to job execution, you should review the following general items related to your flow.

General Tips:

Review your recipes to see if you can identify ways to break them up into smaller recipes.

Operations such as joins and unions can greatly increase the size of your datasets.

Resource consumption is also affected by the complexity of your recipe(s).

If you suspect that there are several jobs running in parallel, you can drop the job launch batch size to 2 or 1, which serializes job execution while preserving memory. For more information, see Configure Application Limits.

You might be able to configure overrides to the Spark settings to allocate more memory for job execution.

This feature may need to be enabled in your environment. See Enable Spark Job Overrides.

"Path not found during execution" error

One or more datasources referenced by your job no longer exist.

Tip

Review your flow and all of its upstream dependencies to locate the broken datasource. Reference errors for upstream dependencies may be visible in downstream recipes.

"Too many columns" error

Your job could not be completed due to one or more datasets containing a large number of columns.

Tip

A general rule of thumb is to avoid having more than 1000 columns in your dataset. Depending on your environment, you may experience performance issues and job failures on narrower datasets.
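For file-based sources, the column count can be checked before import. The following is a rough sketch that assumes a CSV source whose first row is a header; the names `count_columns`, `exceeds_guideline`, and `COLUMN_GUIDELINE` are hypothetical, and the limit of 1000 is the rule of thumb from the tip above, not an enforced threshold.

```python
import csv
import io

# Rule-of-thumb width from the tip above; not a hard platform limit.
COLUMN_GUIDELINE = 1000


def count_columns(csv_text_stream) -> int:
    """Return the number of fields in the first row of a CSV text stream."""
    reader = csv.reader(csv_text_stream)
    header = next(reader, [])  # an empty stream counts as zero columns
    return len(header)


def exceeds_guideline(csv_text_stream, limit: int = COLUMN_GUIDELINE) -> bool:
    """Return True when the first row has more fields than the guideline."""
    return count_columns(csv_text_stream) > limit


# Usage with an in-memory sample; a real file would be opened with
# open(path, newline="", encoding="utf-8") instead.
sample = io.StringIO("id,name,amount\n1,a,2.5\n")
print(count_columns(sample))  # 3
```

Because narrower datasets can also fail in some environments, a result below the guideline is not a guarantee that the job will succeed.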

"Version mismatch" error

The version of Spark installed on your Hadoop cluster does not match the version of Spark that the Designer Cloud Powered by Trifacta platform is configured to use.

Tip

For more information on the appropriate version to configure for the product, see Configure for Spark.

Databricks Job Errors

The following error messages are specific to Spark errors encountered when running jobs on Databricks.

Note

When a Databricks job fails, the failure is immediately reported in the Trifacta Application. Collection of the job log files from Databricks occurs afterward in the background.

Tip

A platform administrator may be able to download additional logs for help in diagnosing job errors.

"Runtime cluster" error

There was an error running your job.

"Staging cluster" error

There was an error launching your job.

Try Other Job Options

You can try to re-execute the job using different options.

Tips:

Disable flow optimizations. If your job is using data from a relational source that supports pushdowns, you can try to disable flow optimizations and then re-run the job. For more information, see Flow Optimization Settings Dialog.

Look to cut data volume. Some job failures occur due to high data volumes. For jobs that execute across a large dataset, you can re-examine your data to remove unneeded rows and columns of data. Use the Deduplicate transformation to remove duplicate rows.

Gather a new sample. In some cases, jobs can fail when run at scale because the sample displayed in the Transformer page did not include problematic data. If you have modified the number of rows or columns in your dataset, you can generate a new sample, which might illuminate the problematic data. However, gathering a new sample may fail as well, which can indicate a broader problem.

Change the running environment. If the job failed on Trifacta Photon, try executing it on another running environment.

Tip

The Trifacta Photon running environment is not suitable for jobs on large datasets. Use the default running environment to execute your job.

Review Logs

Job logs

In the listing for the job on the Job History page, click Download Logs to send the job-related logs to your local desktop.

Note

If encryption has been enabled for log downloads, you must be an administrator to see a clear-text version of the jobs listed below. For more information, see Configure Support Bundling.

When you unzip the ZIP file, you should see a numbered folder with the internal identifier for your job on it. If you executed a transform job and a profile job, the ZIP contains two numbered folders with the lower number representing the transform job.

job.log. Review this log file for information on how the job was handled by the application.

Tip

Search this log file for error.

Support bundle: If support bundling has been enabled in your environment, the support-bundle folder contains a set of configuration and log files that can be useful for debugging job failures.

Tip

Please include this bundle with any request for assistance to Alteryx Support.

For more information on configuring the support bundle, see Configure Support Bundling.

For more information on the bundle contents, see Support Bundle Contents.
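The earlier tip to search job.log for error can be applied to every numbered job folder at once after the ZIP file is extracted. This is a minimal sketch under assumptions: the function name `find_error_lines` is hypothetical, the folder passed in is wherever you unzipped the download, and the match is a simple case-insensitive substring test rather than a parser for the log format.

```python
from pathlib import Path


def find_error_lines(log_root, needle="error"):
    """Yield (file, line_number, text) for each job.log line containing the needle."""
    # rglob finds job.log in every numbered job folder under log_root.
    for log_file in sorted(Path(log_root).rglob("job.log")):
        with log_file.open(encoding="utf-8", errors="replace") as handle:
            for line_number, line in enumerate(handle, start=1):
                # Case-insensitive match so "ERROR" and "Error" are also found.
                if needle.lower() in line.lower():
                    yield log_file, line_number, line.rstrip("\n")


if __name__ == "__main__":
    # "unzipped_logs" is a placeholder for your extraction folder.
    for log_file, line_number, text in find_error_lines("unzipped_logs"):
        print(f"{log_file}:{line_number}: {text}")
```

Lines that mention the word without indicating a failure are also reported, so treat the output as a starting point for reading the surrounding log context.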

Support logs

For support use, the most meaningful logs and configuration files can be downloaded from the application. Select Resources menu > Download logs.

Note

If you are submitting an issue to Alteryx Support, please download these files through the application.

For more information, see Download Logs Dialog.

The admin version of this dialog enables downloading logs by timeframe, job ID, or session ID. For more information, see Admin Download Logs Dialog.

Alteryx node logs

Note

You must be an administrator to access these logs. These logs are included when an administrator downloads logs for a failed job. See above.

On the Trifacta node, these logs are located in the following directory:

<install_dir>/logs

This directory contains the following logs:

batch-job-runner.log. This log contains vital information about the state of any launched jobs.

webapp.log. This log monitors interactions with the web application. Issues related to jobs running locally on the Trifacta Photon running environment can appear here.

Hadoop logs

In addition to these logs, you can also use the Hadoop job logs to troubleshoot job failures.

You can find the Hadoop job logs at port 50070 or 50030 on the node where the ResourceManager is installed.

The Hadoop job logs contain important information about any Hadoop-specific errors that may have occurred at a lower level than the Trifacta Application, such as JDK issues or container launch failures.

Contact Support

If you are unable to diagnose your job failure, please contact Alteryx Support.