Dataproc Engine Setup Guide

Connect your Alteryx One Platform workspace to your Dataproc Serverless account to enable the Dataproc Engine. Dataproc is a distributed Spark engine that can run your Designer Cloud workflows, provided the workspace is configured with GCS as its private data storage. Follow these steps to enable the Dataproc engine in your workspace...

Prerequisites

You must be a Workspace Admin in Alteryx One.

The Alteryx One workspace must be configured with GCS as private data storage.

You must have a GCP service account to run Dataproc batches (jobs).

You must have admin access to the target GCP project.

Create a VPC network for every region that you want to use.

Set the constraint constraints/compute.requireOsLogin to false in the project that you want to use.

Dataproc Engine Setup Guide

Follow these steps to enable the Dataproc engine in your Alteryx One workspace...

GCP Service Accounts

There are two types of service accounts that you need...

Base storage service account for GCS. You need this account only if you use workspace mode. Alteryx One uses this account to access GCS at design time and to create Dataproc batches. The account must have permission to create and monitor Dataproc batches. These are the recommended roles...

Note

If you use user mode, Alteryx One does not use the base storage service account. Instead, Alteryx One uses your SSO identity to start the Dataproc batch. However, your identity still needs the same roles that are listed for the base storage service account.

Dataproc Editor (roles/dataproc.editor) on the project where you want to run Dataproc.

Service Account User (roles/iam.serviceAccountUser) on the Dataproc service account. For more information, go to the GCS roles documentation.

Dataproc service account. Alteryx One passes this service account as an argument when it creates a Dataproc batch. The account must have the Dataproc Worker role (roles/dataproc.worker) on the project where the batch runs.
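
The role assignments described above can be sketched as gcloud invocations. This is a minimal sketch, not an official Alteryx procedure: it only builds the argument lists and never runs them. The function name, project ID, and account emails are hypothetical placeholders, and the sketch assumes that the gcloud projects add-iam-policy-binding and gcloud iam service-accounts add-iam-policy-binding commands are available in your Cloud SDK installation.

```python
from typing import List


def role_binding_commands(project_id: str, base_sa: str, dataproc_sa: str) -> List[List[str]]:
    """Build gcloud argument lists for the role grants described above.

    All inputs are hypothetical placeholders. Nothing is executed here.
    """
    base_member = f"serviceAccount:{base_sa}"
    worker_member = f"serviceAccount:{dataproc_sa}"
    return [
        # Dataproc Editor on the project, for the base storage service account.
        ["gcloud", "projects", "add-iam-policy-binding", project_id,
         f"--member={base_member}", "--role=roles/dataproc.editor"],
        # Service Account User on the Dataproc service account itself.
        ["gcloud", "iam", "service-accounts", "add-iam-policy-binding", dataproc_sa,
         f"--member={base_member}", "--role=roles/iam.serviceAccountUser"],
        # Dataproc Worker on the project, for the Dataproc service account.
        ["gcloud", "projects", "add-iam-policy-binding", project_id,
         f"--member={worker_member}", "--role=roles/dataproc.worker"],
    ]


if __name__ == "__main__":
    commands = role_binding_commands(
        "my-project",                                      # hypothetical project ID
        "base-storage@my-project.iam.gserviceaccount.com",  # hypothetical account
        "dataproc-run@my-project.iam.gserviceaccount.com",  # hypothetical account
    )
    for cmd in commands:
        print(" ".join(cmd))
```

In user mode, the member in the first two commands would presumably be the user's own identity rather than the base storage service account. Review each printed command before running it.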

GCP Project Setup

Set the constraint constraints/compute.requireOsLogin to false in the Google Cloud Platform (GCP) project that you want to use. For more information, go to the GCS policies documentation.
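
As an illustration of what this setting looks like as data, the sketch below builds a policy document that turns off enforcement of the constraint for one project. It assumes the policy file shape used by the Organization Policy API v2 (a name plus a spec with a single enforce rule), which could then be applied with a tool such as gcloud org-policies set-policy. The function name and project ID are hypothetical; verify the format against Google's documentation before applying it.

```python
import json


def os_login_policy(project_id: str) -> dict:
    """Return a policy document (assumed v2 format) that disables enforcement
    of constraints/compute.requireOsLogin for a single project."""
    return {
        "name": f"projects/{project_id}/policies/compute.requireOsLogin",
        "spec": {"rules": [{"enforce": False}]},
    }


if __name__ == "__main__":
    # "my-project" is a hypothetical project ID.
    print(json.dumps(os_login_policy("my-project"), indent=2))
```

Saving the printed JSON to a file gives an artifact that can be reviewed or version-controlled before anyone changes the project's policy.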

VPC Network Setup

You must have a VPC network configured to run Dataproc jobs. For more information on how to set up this network, go to the Dataproc Serverless documentation.

Complete the Setup

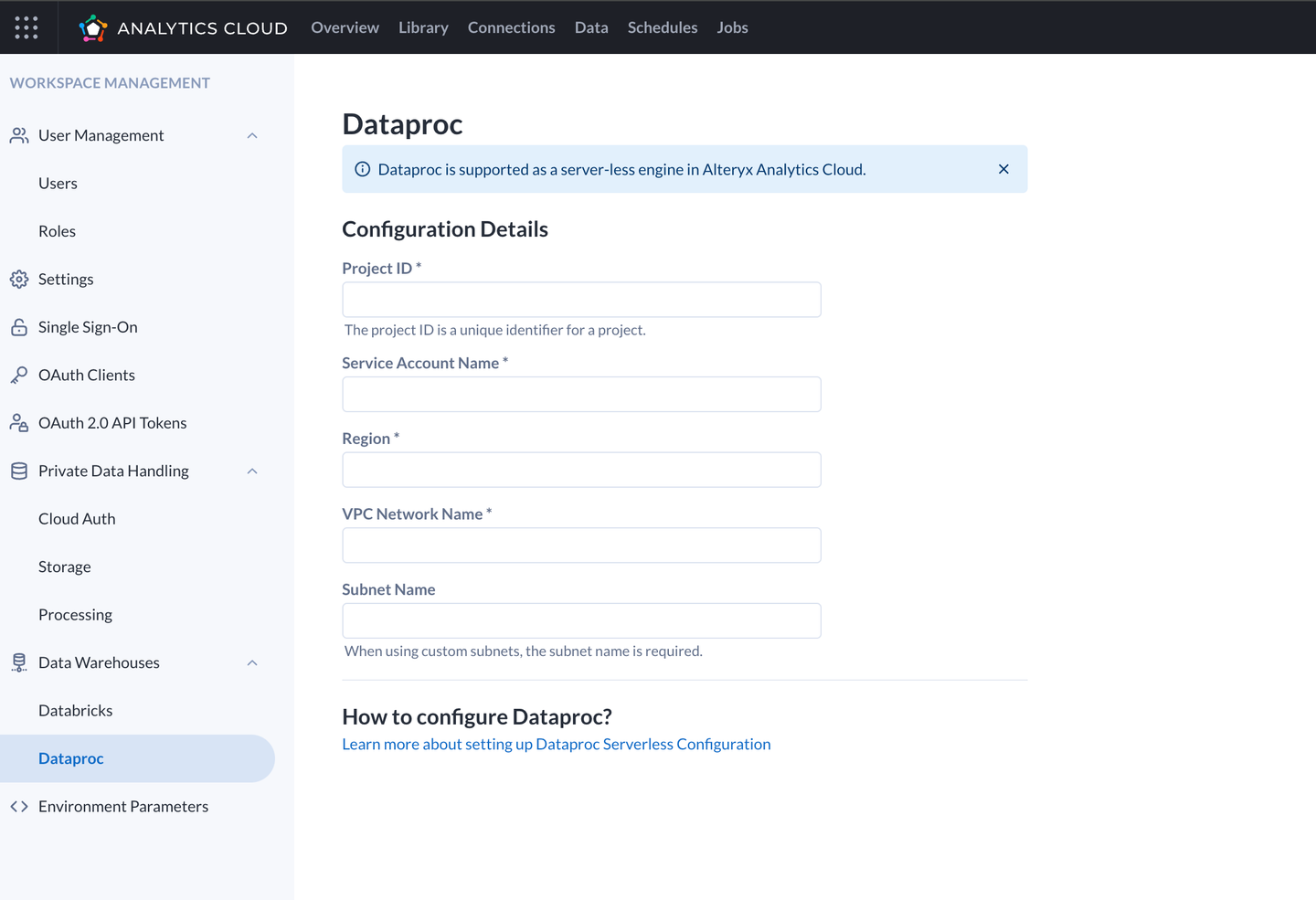

The workspace admin can configure Dataproc for the workspace from the admin console.

Go to Workspace Admin > Data Warehouses > Dataproc section.

Fill In the Configuration Form

| Field | Description |
| --- | --- |
| Project ID | The Google project in which the Dataproc batch runs. |
| VPC Network Name | The VPC network to use. In this case, a network with automatic subnets is used, so the subnet name does not need to be specified explicitly. If the network is configured with custom subnets, the subnet name must also be specified in the form. |
| Region | The region where the Dataproc batch runs. |
| Service Account Name | The service account used to run the Dataproc batch. It is passed as a parameter at startup and is not necessarily the same service account as the base storage account. |
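
The form rules above, in particular the subnet requirement for networks with custom subnets, can be sketched as a small pre-flight check. This is an illustrative sketch only: the class, field names, and the uses_custom_subnets flag are hypothetical and do not correspond to any Alteryx API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DataprocFormValues:
    """Hypothetical container mirroring the form fields described above."""
    project_id: str
    vpc_network_name: str
    region: str
    service_account_name: str
    subnet_name: Optional[str] = None   # needed only for custom subnets
    uses_custom_subnets: bool = False   # assumption: known to the admin


def validate(values: DataprocFormValues) -> List[str]:
    """Return a list of problems found; an empty list means nothing was flagged."""
    problems = []
    for field_name in ("project_id", "vpc_network_name", "region", "service_account_name"):
        if not getattr(values, field_name).strip():
            problems.append(f"{field_name} is required")
    # Auto-mode networks need no subnet; custom-mode networks do.
    if values.uses_custom_subnets and not (values.subnet_name or "").strip():
        problems.append("subnet_name is required when the network uses custom subnets")
    return problems


if __name__ == "__main__":
    form = DataprocFormValues(
        project_id="my-project",            # hypothetical values throughout
        vpc_network_name="my-custom-vpc",
        region="us-central1",
        service_account_name="dataproc-run@my-project.iam.gserviceaccount.com",
        uses_custom_subnets=True,
    )
    for problem in validate(form):
        print(problem)
```

A check like this catches only missing values. It does not confirm that the network, region, or service account actually exist in the project.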